Virtual Costuming & Motion Capture Research

A series of research projects, begun in the summer of 2021 and still ongoing, focused on pushing the boundaries of theatrical and live costume design into virtual spaces such as Zoom, virtual reality, game engines, and projection design, and on using new and emerging technologies to create the live theatrical entertainment of the future.

This page is updated as progress occurs and is documented.

History of Research

Summer 2021

Research into virtual costume and character design, focused mainly on Unreal Engine’s MetaHuman project and CLO3d, a virtual draping, sewing, and fabric simulation program typically used in the fashion industry, to see how these programs could be incorporated into a traditional live theatre production workflow. Stemming from this research, the skirts that needed to turn into pants for England’s Splendid Daughters were draped, prototyped, printed, and then physically sewn for use on stage, with CLO3d as the main design engine instead of a typical hand patterning or drafting workflow. Additionally, initial attempts were made to apply a virtually draped costume to a virtual actor, though this work was put on the back burner due to resource limitations.

Summer-Winter 2022

Research shifted to character and costume design for Pieces of Me, a live, interactive devised theatrical production in which a single actor in a motion capture suit with a facial camera would inhabit three separate characters (The Guide, The Owl, and The Dancer) in a virtual environment to tell a story of ecological destruction and rebirth. The audience accessed the show via Meta Quest 2 virtual reality headsets and could interact with the actor and the world to accomplish a variety of tasks that affected the story. For this design, 3ds Max, the Unity game engine, and Motive by OptiTrack were used in tandem to create these unique abstract characters, bring them to life, and link them to a human body’s motions (realistic or otherwise). This research, along with the installation piece Concrete Oasis, led to an interest in merging the actual and the virtual in costume design to create an interactive multimedia workflow that would work for any type of live entertainment: theatre, fashion shows, installation pieces, and the like.

Spring-Summer 2023

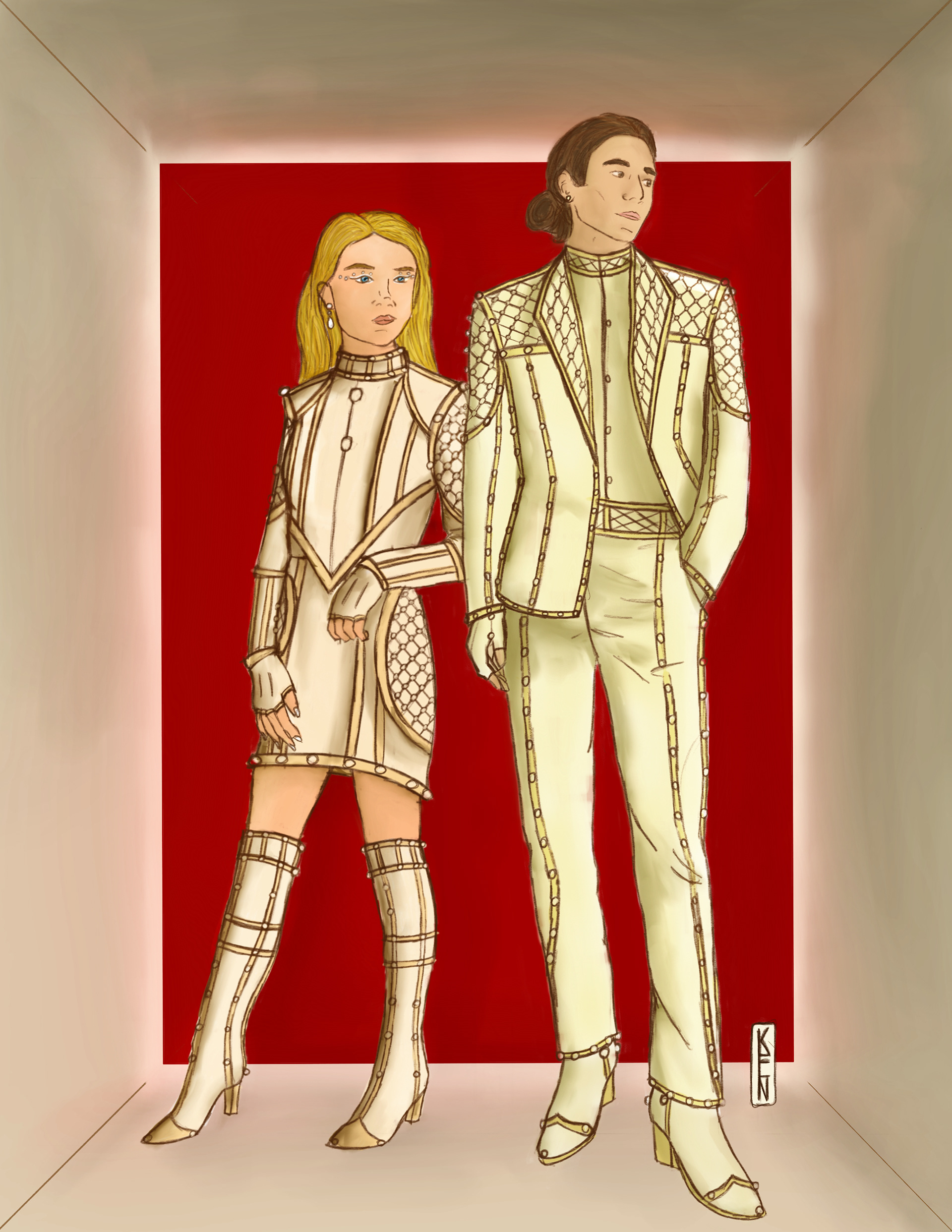

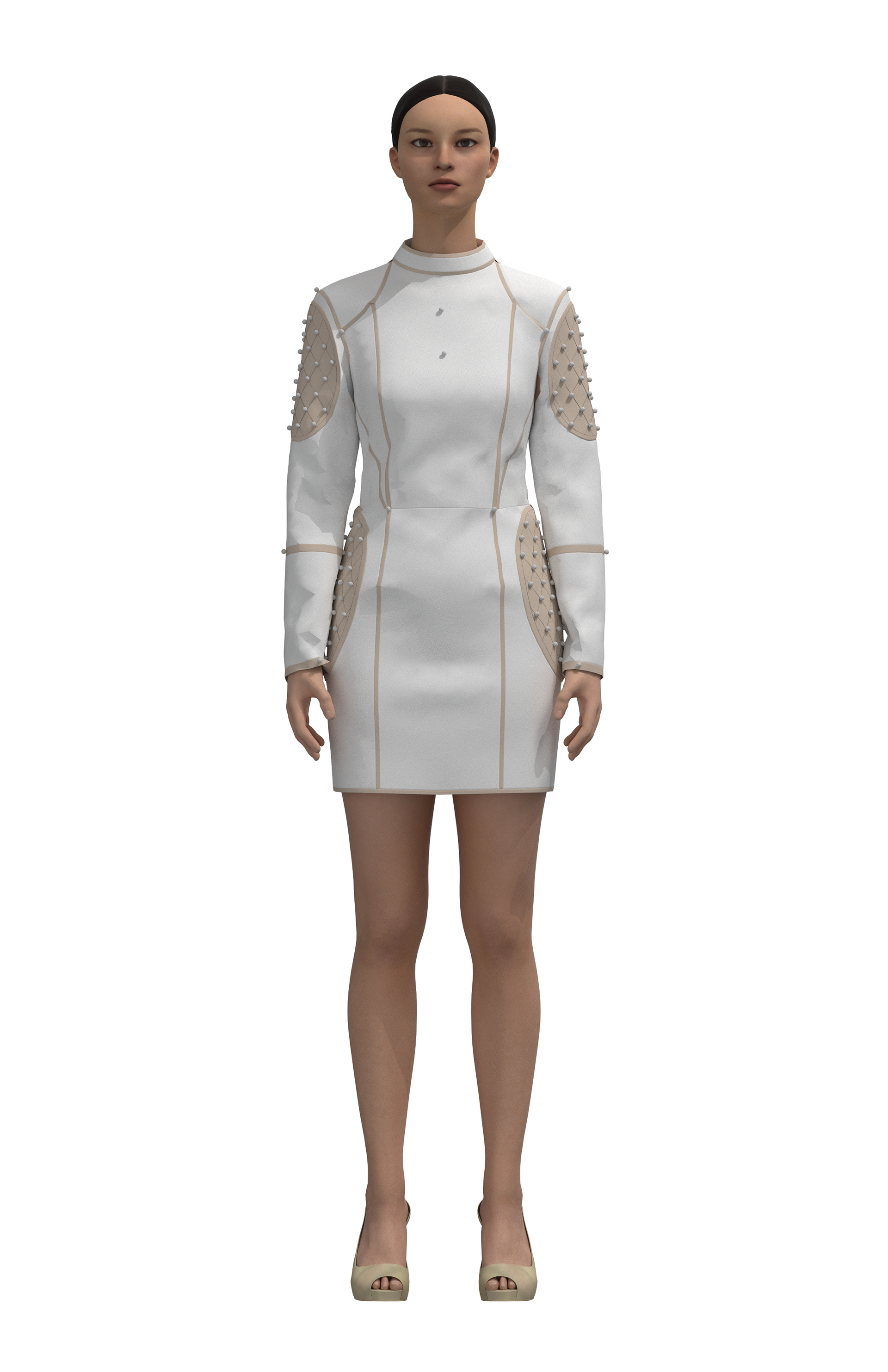

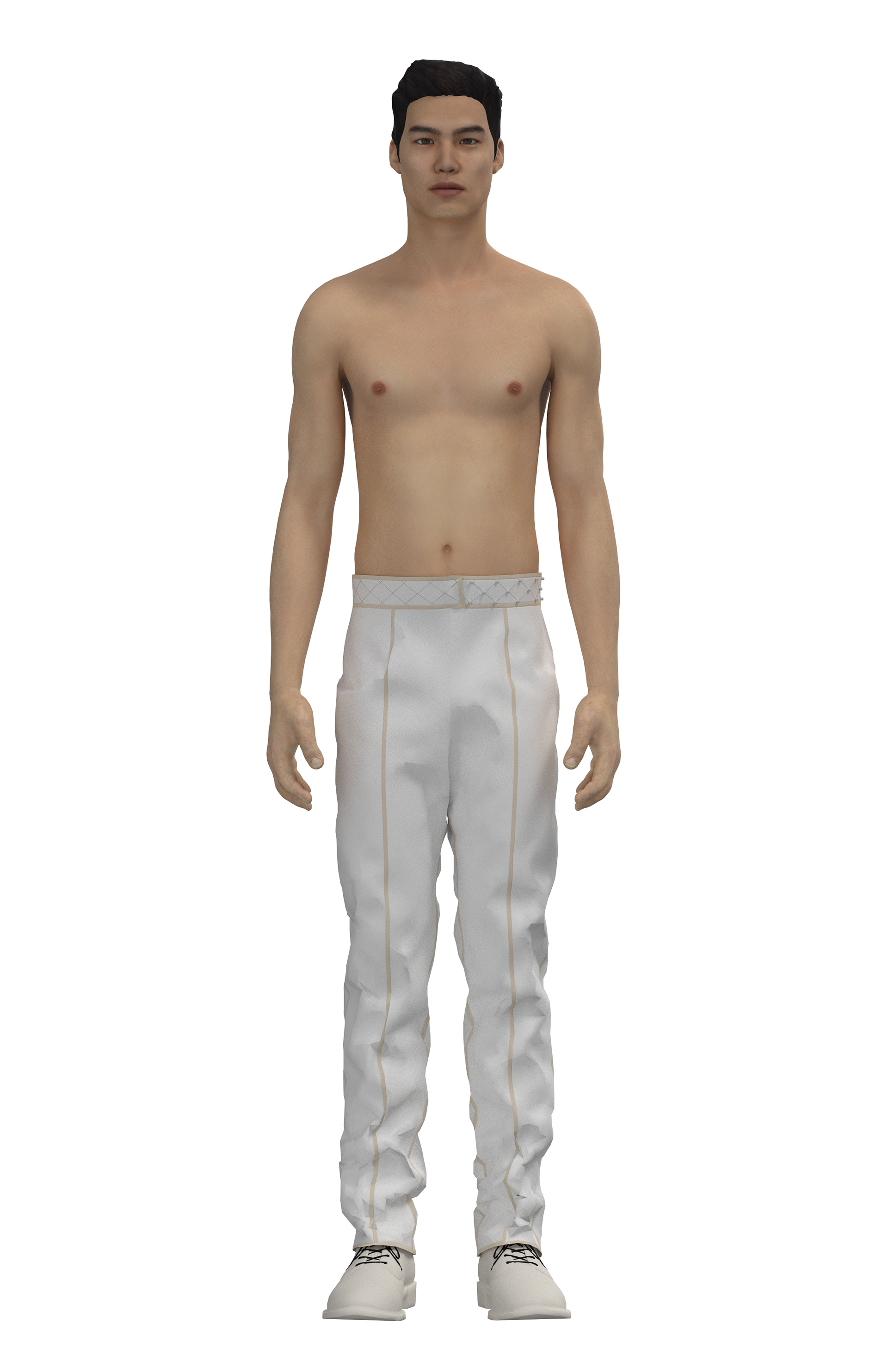

With a major focus on using CLO3d for costume designs accurate to actor measurements, which could be physically produced while also being used in a virtual environment, research shifted to creating a virtual-actual symbiosis in a cohesive design and showcase. Research was conducted into hiding motion capture markers in a costume for subtle skeletal tracking; CLO3d garments were designed, physically created, and placed on identical MetaHuman avatars in Unreal Engine; and these tools were used to experiment with projections, 360-degree projection mapping on garments, and a hybrid virtual reality and real-life experience. The end goal is a ‘fashion show’ of sorts, somewhere between an art installation and a show, that demonstrates how these emerging technologies can be incorporated into a traditional theatrical workflow and how they can create new and unique experiences for an audience.

Additionally, the research into incorporating emerging technologies into live entertainment such as theatre is being written up both for USITT Theatre Design and Technology and as a guidebook of specific workflows for integrating costume, set, prop, lighting, and media designs from commonly used theatrical programs into Unreal Engine for use in prototyping and motion capture projects.

MORE PHOTOS AND DOCUMENTATION TO COME